CONCEPTUAL NOTE:

When we pass someone in a hallway, the fleeting interaction is full of complex social evaluation: assessments of personality, sexuality, and socio-economic status. These off-the-cuff evaluations are unfounded yet automatic, as subjective impressions inform our quick construction of a stranger's life.

Only if you're there (I'll meet you there) attempts to separate biased and confounding evaluations from the simple act of a chance encounter: two travelers passing each other along an interior pathway. Is it possible for such a simple event to be devoid of social (mis)evaluation and schematic judgment? By creating a musical encounter between two spatially separated individuals, this installation explores these distinctions. The musical system constructs an indirect encounter, occurring within a disembodied aural space. It exists along the pathway, yet it is not confined to its subjects and is perhaps not even salient to them.

As a single pedestrian interacts with one of the work's two sonar sensor systems, her distance from the sensor array controls the amplitude of ambient inharmonic sound. The interaction of only one pedestrian with the installation makes the musical system discoverable. Ultimately, though, this interaction serves as a baseline for perceptual comparison with the musical effect of simultaneous interaction at both spatially separated sonar sensor sites. When two individuals walk through the two sensor sites simultaneously, the system crossfades the ambient inharmonic sound into clear harmonic tones. The frequencies and amplitudes of these tones are individually controlled by changes in each person's distance from the corresponding sensor array.

The design of this musical installation maintains the normalcy, nuance, and chance that define each passing encounter of pedestrian traffic. The physical discontinuity between two simultaneous points of audience interaction with the musical system creates the possibility for a virtual, aural space for interaction to emerge. Here, spatially separated individuals interact through a musical intermediary. This human encounter is devoid of social evaluation: there are no fragments of conversation to take into account, no fashion trends to critique, and no body language to interpret. The installation confronts an individual's ability to search for, parse, and even project the humanity existing within the sound. The audience feels the music by experiencing the subtle interaction of sound and space. Once discovered, the installation's sound, like fragments of speech or a person's clothing, invites evaluation and attribution to a particular social dynamic: a composer's intervention, the movements of two pedestrians passing one another, or even one's own projection of musicality onto the aural space.

TECHNICAL NOTE:

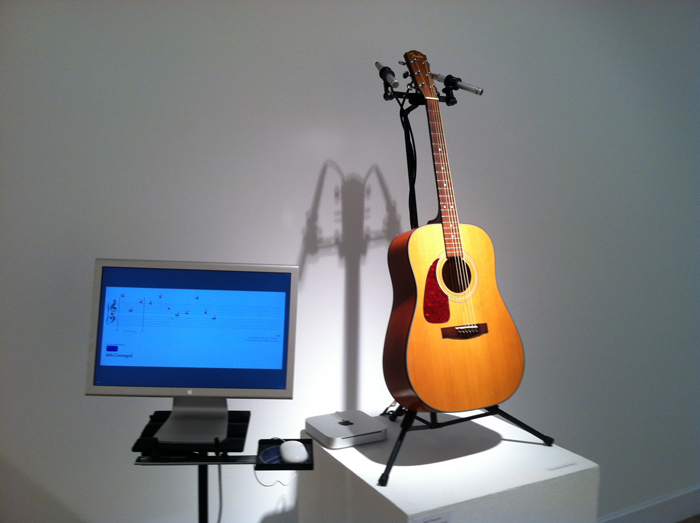

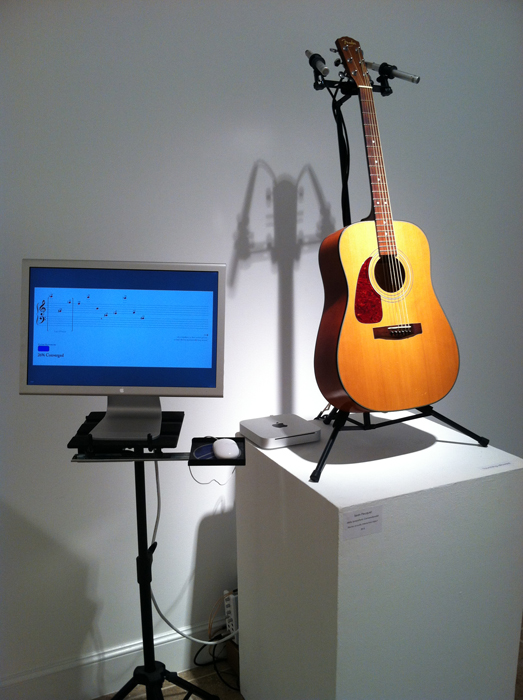

Hardware Components:

• 2 AVRLinx V1.1 AVR/Radio Boards (Procyon Engineering)

• 6 MaxBotix LV-MaxSonar-EZ1 ultrasound range finders

• 1 ENC28J60-H 10Mbit Ethernet-Interface breakout board

Sonar Arrays:

Each array uses three MaxBotix EZ1 ultrasound range finders. The sensors' readings are sampled by an ATmega32 AVR microcontroller with a CPU crystal running at 14.745 MHz. The microcontroller chips are integrated into an AVRLinx Radio Board (v1.0) (developed by Pascal Stang). Radio Board A is programmed to sample its three ultrasound sensors and then transmit the readings wirelessly via radio frequency to Radio Board B. Radio Board B is programmed to sample its own three ultrasound sensors and combine those readings with the readings received from Radio Board A. Radio Board B then sends all data as Open Sound Control messages, through an ENC28J60-H Ethernet header board, to a Max/MSP patch running on a laptop computer.
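To illustrate the last step of this data path, here is a minimal Python sketch of how six range readings might be packed into one Open Sound Control message. It is not the firmware used in the installation, which runs on the AVR boards. The address `/sonar`, the helper names, the sample readings, and the choice to send all six readings as 32-bit integers in a single message are assumptions made for illustration; only the byte layout (null-padded strings, big-endian `int32` arguments) comes from the OSC 1.0 format.

```python
import struct


def osc_pad(data):
    """Null-terminate a byte string and pad it to a multiple of 4 bytes."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc(address, values):
    """Build one OSC message carrying big-endian int32 arguments."""
    type_tags = "," + "i" * len(values)
    payload = b"".join(struct.pack(">i", v) for v in values)
    return (osc_pad(address.encode("ascii"))
            + osc_pad(type_tags.encode("ascii"))
            + payload)


def merge_readings(board_a, board_b):
    """Combine Board A's received readings with Board B's own (A first)."""
    return list(board_a) + list(board_b)


# Hypothetical readings: three per board, in whatever unit the firmware reports.
readings = merge_readings([40, 52, 61], [33, 47, 58])
packet = encode_osc("/sonar", readings)  # hypothetical address
```

A packet like this could be sent over UDP and unpacked on the receiving side into six values, three per sensor array.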

Computer Processing:

A Max/MSP patch serves as the control interface for the sonar sensors. Sensor data is mapped within the patch to control the amplitude of inharmonic sound. The inharmonic sound is derived from sound samples that were processed ahead of time using spectral filtering and delay software that I had previously developed. Inharmonic sound is output when only one of the sensor arrays shows fluctuations in its distance readings, indicating movement. If movement is detected simultaneously by both sensor arrays, the program crossfades the sound output from the processed, inharmonic samples to harmonic triangle waves. The sensor data then controls the amplitude of the triangle waves. The frequencies of the tones for each sensor array are stacked Pythagorean fifths (a 3:2 ratio between adjacent tones). Sensor arrays A and B are separated in frequency by a Pythagorean fourth (4:3).

• Sensor Array A: 466.67 Hz, 700 Hz, and 1050 Hz.

• Sensor Array B: 350 Hz, 525 Hz, and 787.5 Hz.
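The tuning and switching logic described above can be sketched in Python. This is an illustration, not the Max/MSP patch itself. The frequencies follow from the 3:2 and 4:3 ratios applied to Array B's 350 Hz base tone. The movement threshold, the "idle" state when neither array detects movement, and the equal-power crossfade curve are assumptions; the patch's actual movement test and fade shape are not documented here.

```python
import math
from fractions import Fraction

FIFTH = Fraction(3, 2)   # Pythagorean perfect fifth
FOURTH = Fraction(4, 3)  # Pythagorean perfect fourth


def stacked_fifths(base_hz, count=3):
    """Return `count` frequencies, each a 3:2 fifth above the previous one."""
    return [float(base_hz * FIFTH ** i) for i in range(count)]


ARRAY_B = stacked_fifths(Fraction(350))           # 350, 525, 787.5 Hz
ARRAY_A = stacked_fifths(Fraction(350) * FOURTH)  # a fourth above Array B


def is_moving(readings, threshold=2.0):
    """Assumed rule: recent readings that vary by more than `threshold`
    count as movement in front of the array."""
    return max(readings) - min(readings) > threshold


def select_mode(window_a, window_b):
    """Choose the sound source from recent readings at each array."""
    moving_a, moving_b = is_moving(window_a), is_moving(window_b)
    if moving_a and moving_b:
        return "harmonic"    # triangle-wave tones
    if moving_a or moving_b:
        return "inharmonic"  # processed samples
    return "idle"            # assumed state: no movement at either site


def crossfade_gains(position):
    """Equal-power gains (inharmonic, harmonic) for a fade position in [0, 1]."""
    position = min(max(position, 0.0), 1.0)
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)
```

Rounded to two decimal places, `ARRAY_A` gives 466.67, 700, and 1050 Hz, matching the list above.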

Parabolic Loudspeakers:

Six parabolic loudspeakers play the computer-generated sound, three per site. Each speaker is aimed in the same direction as one of the sonar sensors. The parabolic speakers were crafted by hand, using some prefabricated elements. Each speaker's parabolic reflector is 18 inches in diameter. The drivers are rated at 5 watts and 8 ohms. Both the speaker cabinets and the frames holding the parabolic reflectors are made of wood. The speakers are powered by mono 7-watt amplifiers, which I assembled.

Installation Space:

Only if you're there (I'll meet you there) should be installed along an interior pathway, such as at two ends of an interior hallway (approximately 50 yards apart). The ultrasound sensor arrays should be positioned such that each individual sensor can detect movement from a different direction.